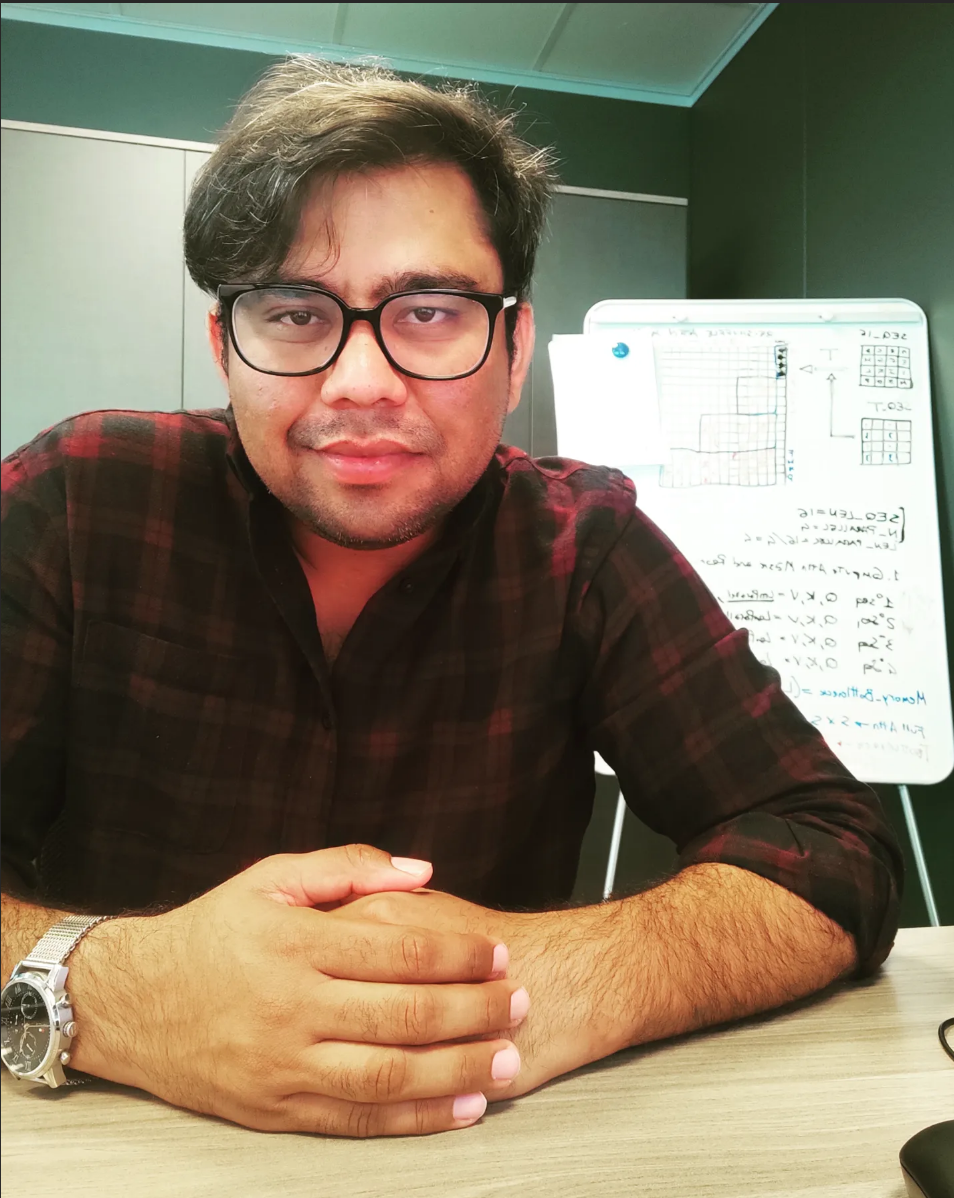

3D Vision & Robotics Researcher

Ph.D. · Former Postdoc · Industry Researcher

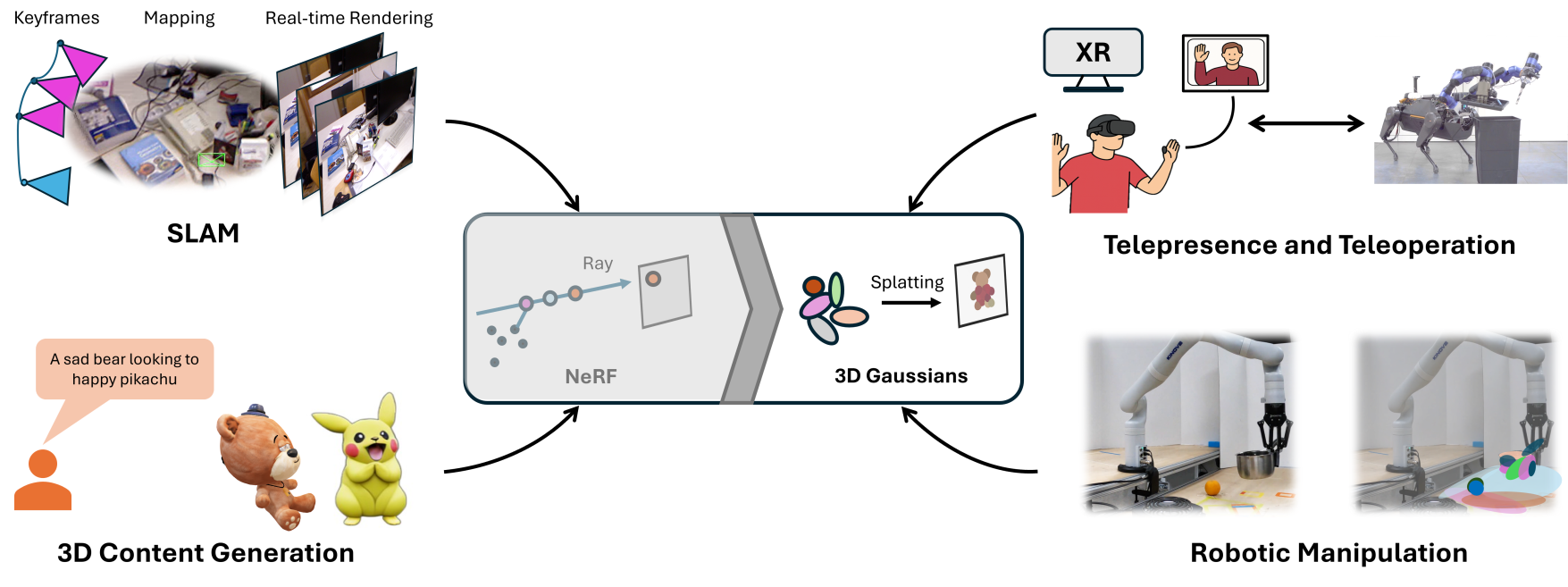

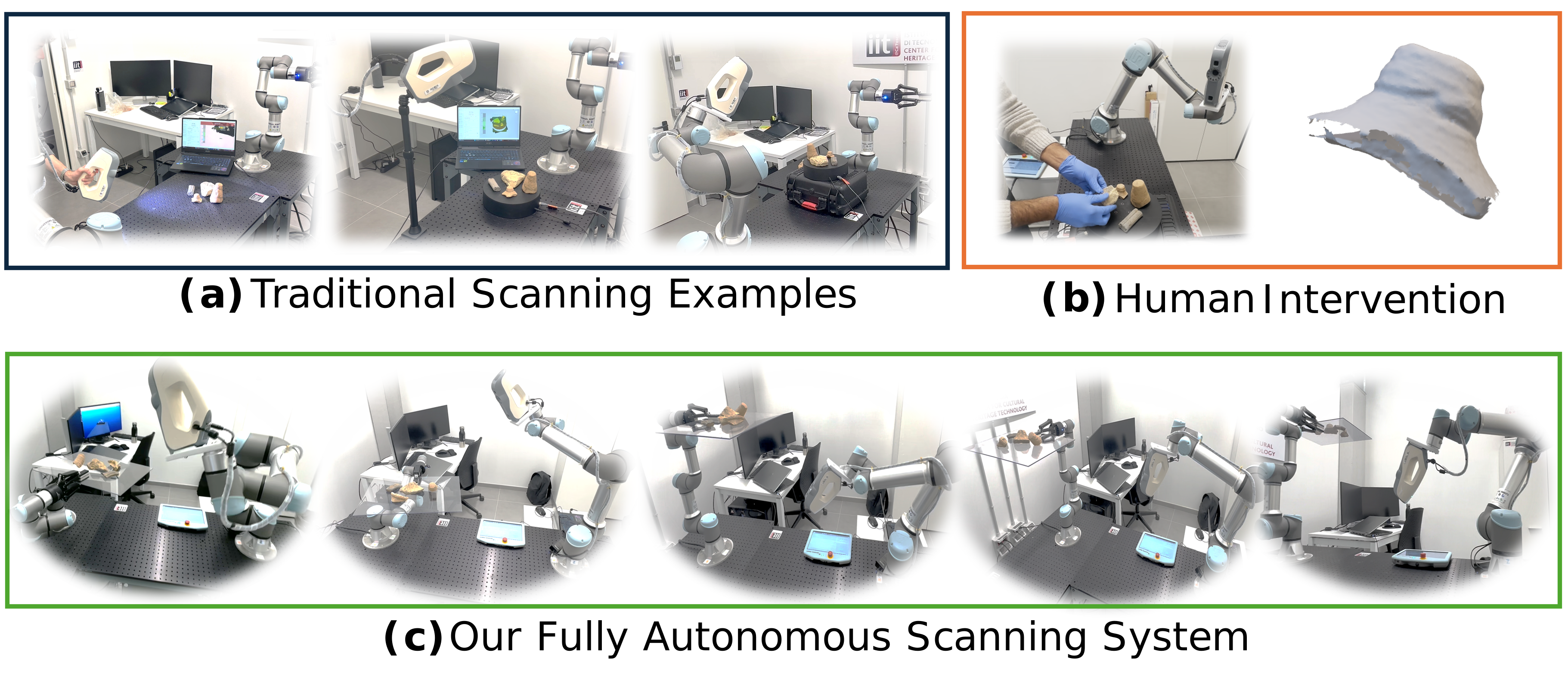

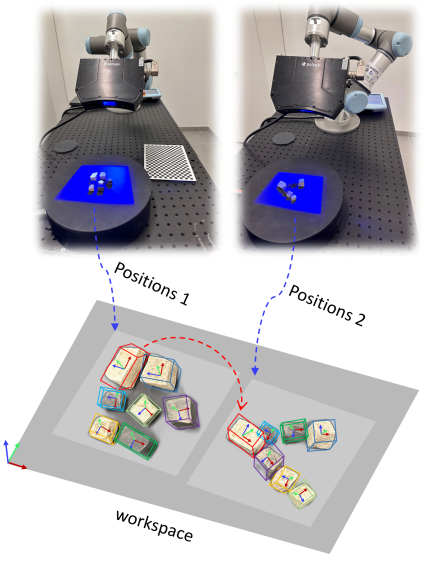

I build end-to-end autonomous systems at the intersection of SLAM, active 3D perception, and robotic platforms such as UAVs and mobile manipulators. I was previously at the Italian Institute of Technology (ADVR & CCHT) and now work in industry, developing drone-based 3D vision systems.